Computers and computing devices such as mobile phones, laptops, and tablets have become integral to our lives. While these devices simplify our day-to-day tasks by providing fast computation, there are specific tasks where the human brain performs much better than machines (e.g., “recognizing a face,” “identifying a voice,” “learning to perform tasks like cooking or riding,” or “playing games like chess”).

Artificial Intelligence aims to provide machines with human-like intelligence. John McCarthy formally defined it as

“the science and engineering of making intelligent machines, especially intelligent computer programs. It is related to the similar task of using computers to understand human intelligence, but AI does not have to confine itself to methods that are biologically observable.”

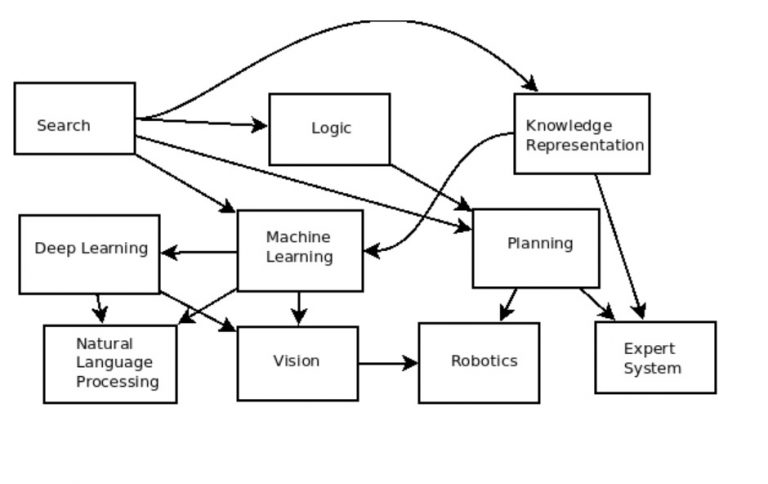

While research in Artificial Intelligence has been going on for more than six decades, the recent availability of massive amounts of data online has led to a sharp rise in the development of new AI tools and technologies. Broadly, the various tasks of AI and their interdependence can be described as shown in Figure 1.

As shown in Figure 1, traditional AI systems were built around knowledge expressed in first-order and second-order logic. Decision-making relied on heuristic search techniques to select the best available choice. Search and optimization remain at the core of traditional AI systems, which found applications in planning, expert systems, and robotics.

More recently, the evolution of the World Wide Web and the availability of large amounts of data on the internet have pushed the field towards data-driven techniques, in which a system learns from the available data and makes intelligent decisions. Machine learning is the branch of AI that deals with making machines learn from data. While traditional machine learning systems rely on statistical and rule-based approaches to learn from data, large-scale neural network-based deep learning techniques have more recently become the de facto standard for developing sophisticated intelligent systems.

The domains of AI mentioned above find a wide range of applications in Natural Language Processing, Computer Vision, Robotics, and Expert Systems. The increasing amount of data on the internet has also opened up the field of data science, where data available in different formats (e.g., audio, video, or text) is used to make intelligent business decisions.

The following are brief summaries of the different domains.

1. Search

Search algorithms form the core of many Artificial Intelligence programs. Intelligent search algorithms solve problems such as game playing and puzzle solving, route and cost optimization, action planning, knowledge mining, software and hardware verification, and theorem proving. Many AI problems can be modelled as search problems, where the task is to reach a goal state from an initial state by applying state-transformation rules. The search space is then a graph (or a tree), and the aim is to reach the goal from the initial state via the shortest path, measured in terms of cost, length, or a combination of both.
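The state-space formulation can be sketched with the classic water-jug puzzle, a hypothetical example not taken from the original text. A state is the amount of water in a 4-litre jug and a 3-litre jug, the transformation rules fill, empty, or pour between the jugs, and breadth-first search finds a shortest sequence of moves that leaves 2 litres in the larger jug. The names `successors` and `solve` are illustrative.

```python
from collections import deque

# Hypothetical example: the water-jug puzzle as a state-space search.
# A state is (a, b): litres in a 4-litre jug A and a 3-litre jug B.
CAP_A, CAP_B = 4, 3

def successors(state):
    """Apply every state-transformation rule to a state."""
    a, b = state
    pour_ab = min(a, CAP_B - b)  # amount that can move from A into B
    pour_ba = min(b, CAP_A - a)  # amount that can move from B into A
    return [
        (CAP_A, b),                  # fill A
        (a, CAP_B),                  # fill B
        (0, b),                      # empty A
        (a, 0),                      # empty B
        (a - pour_ab, b + pour_ab),  # pour A into B
        (a + pour_ba, b - pour_ba),  # pour B into A
    ]

def solve(start, is_goal):
    """Breadth-first search: returns a shortest list of states from start to a goal."""
    frontier = deque([start])
    parent = {start: None}
    while frontier:
        state = frontier.popleft()
        if is_goal(state):
            path = []
            while state is not None:  # walk back to the start via parent links
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = state
                frontier.append(nxt)
    return None

path = solve((0, 0), lambda s: s[0] == 2)
print(path)
```

Because every move counts as one step here, the path BFS returns is shortest in length; problems with unequal move costs need a cost-aware search instead.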

All search methods can be broadly classified into two categories:

- Uninformed (or Exhaustive or Blind) methods: The search uses no information beyond what is provided in the problem statement. Examples include Breadth-First Search and Depth-First Search.

- Informed (or Heuristic) methods: The search uses additional information, such as a heuristic estimate of the distance to the goal, to choose the next step towards the solution. Best-First Search is an example of such algorithms.
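To show how a heuristic steers an informed search, here is a minimal sketch of greedy best-first search, one common variant of Best-First Search. The graph and the heuristic values `H` are hypothetical; the algorithm always expands the frontier node whose estimated distance to the goal is smallest.

```python
import heapq

# Hypothetical map: edges between places, and a heuristic h(n) estimating
# the remaining distance to the goal G (e.g. straight-line distance).
GRAPH = {
    "S": ["A", "B"],
    "A": ["C", "D"],
    "B": ["E"],
    "C": [],
    "D": ["G"],
    "E": ["G"],
    "G": [],
}
H = {"S": 6, "A": 4, "B": 5, "C": 7, "D": 2, "E": 3, "G": 0}

def greedy_best_first(start, goal, graph, h):
    """Expand the frontier node with the smallest heuristic value first."""
    frontier = [(h[start], start)]  # priority queue ordered by h(n)
    parent = {start: None}
    expanded = []
    while frontier:
        _, node = heapq.heappop(frontier)
        expanded.append(node)
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1], expanded
        for nxt in graph[node]:
            if nxt not in parent:
                parent[nxt] = node
                heapq.heappush(frontier, (h[nxt], nxt))
    return None, expanded

path, expanded = greedy_best_first("S", "G", GRAPH, H)
print("path:", path)          # path: ['S', 'A', 'D', 'G']
print("expanded:", expanded)  # expanded: ['S', 'A', 'D', 'G']
```

The heuristic lets the search skip B, C, and E entirely. Greedy best-first search does not guarantee the cheapest path; A* search adds the cost already travelled to the heuristic to obtain that guarantee under suitable conditions on h.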

2. Logic

Logic can be defined as the proof or validation behind a line of reasoning. Logic is an essential part of AI because it enables an agent to make decisions based on the current situation. It provides the ability to choose an option and to justify selecting or rejecting it.

In Artificial Intelligence, we deal with two types of logic: deductive logic and inductive logic.

- Deductive logic: In deductive logic, the premises provide complete evidence for the truth of the conclusion. The agent starts from specific, accurate premises that lead necessarily to a specific conclusion. An example of this logic can be seen in an expert system designed to suggest medicines to a patient, given their symptoms.

- Inductive logic: In inductive logic, reasoning follows a ‘bottom-up’ approach: the agent takes specific information and generalizes from it to reach a broader understanding, so its conclusions are probable rather than certain. An example can be seen in natural language processing, where an agent learns to map an entire sentence into a latent embedding space that can be used as a proxy for the sentence's meaning.
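The guarantee that deductive logic offers can be checked mechanically in propositional logic: a conclusion follows from the premises if it is true in every truth assignment (model) that makes all the premises true. The sketch below uses truth-table model checking; the symbols `rain` and `wet_ground` and the function `entails` are hypothetical names chosen for illustration.

```python
from itertools import product

# Hypothetical propositional knowledge:
#   premise 1: rain -> wet_ground
#   premise 2: rain
#   conclusion to test: wet_ground
SYMBOLS = ["rain", "wet_ground"]

def implies(p, q):
    return (not p) or q

PREMISES = [
    lambda m: implies(m["rain"], m["wet_ground"]),
    lambda m: m["rain"],
]

def entails(premises, conclusion, symbols):
    """Valid deduction: the conclusion holds in every model where all premises hold."""
    for values in product([False, True], repeat=len(symbols)):
        model = dict(zip(symbols, values))
        if all(p(model) for p in premises) and not conclusion(model):
            return False  # counter-example: premises true, conclusion false
    return True

print(entails(PREMISES, lambda m: m["wet_ground"], SYMBOLS))      # True
print(entails(PREMISES[:1], lambda m: m["wet_ground"], SYMBOLS))  # False
```

Dropping the premise `rain` breaks the deduction, because a model with no rain and a dry ground satisfies the remaining premise but not the conclusion. Truth tables grow exponentially with the number of symbols, so practical systems use inference rules or SAT solvers instead.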

3. Knowledge Representation

Knowledge Representation in AI is concerned with how knowledge is represented in a machine. It studies how the beliefs, intentions, and judgments of an intelligent agent can be expressed in a form suitable for automated reasoning. One of the primary purposes of Knowledge Representation is modeling intelligent behavior for an agent.

Knowledge Representation and Reasoning (KR, KRR) represents information from the real world in a form a computer can understand, and then uses this knowledge to solve complex real-life problems such as communicating with human beings in natural language. Knowledge representation in AI is not just about storing data in a database; it allows a machine to learn from that knowledge and behave intelligently like a human being.

There are five types of knowledge:

- Declarative Knowledge: It includes concepts, facts, and objects, and is expressed in declarative sentences.

- Structural Knowledge: It is basic problem-solving knowledge that describes the relationships between concepts and objects.

- Procedural Knowledge: This is knowledge of how to do something, and includes rules, strategies, procedures, etc.

- Meta Knowledge: This is knowledge about the other types of knowledge.

- Heuristic Knowledge: This represents the knowledge of experts in a field or subject, such as rules of thumb based on experience.
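A minimal sketch of how declarative and structural knowledge can be stored and reasoned over: facts are kept as (subject, relation, object) triples in the style of a semantic network, `is_a` links capture the relationships between concepts, and a simple inheritance rule lets the program answer questions that are not stored explicitly. The facts and function names are hypothetical.

```python
# Hypothetical knowledge base of (subject, relation, object) triples.
FACTS = {
    ("canary", "is_a", "bird"),
    ("penguin", "is_a", "bird"),
    ("bird", "is_a", "animal"),
    ("bird", "has", "feathers"),
    ("animal", "has", "skin"),
    ("canary", "color", "yellow"),
}

def ancestors(concept, facts):
    """Follow is_a links upward (structural knowledge)."""
    result, frontier = [], [concept]
    while frontier:
        current = frontier.pop()
        for s, r, o in facts:
            if s == current and r == "is_a" and o not in result:
                result.append(o)
                frontier.append(o)
    return result

def properties(concept, relation, facts):
    """Collect values of a relation for a concept, inherited through is_a links."""
    found = set()
    for c in [concept] + ancestors(concept, facts):
        found |= {o for s, r, o in facts if s == c and r == relation}
    return found

print(sorted(properties("canary", "has", FACTS)))  # ['feathers', 'skin']
```

No triple states that a canary has skin; the answer is derived by inheritance, which is the difference between representing knowledge and merely storing data.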

4. Machine Learning

Machine learning (ML) deals with making computers learn from data, so that they can make accurate predictions without being explicitly programmed for the task. The recent growth of digital data available on the internet has made it possible to develop sophisticated systems that learn from available data and make predictions on new, unseen data. Learning techniques can broadly be divided into two categories: supervised learning and unsupervised learning.

- Supervised Learning: In supervised learning, the actual output for each training input is known in advance. The algorithm uses these known outputs to train the system. Once trained, the system can predict the output for new inputs whose output is not known in advance.

- Unsupervised Learning: In an unsupervised learning setup, the output for a given input is not known in advance. Unsupervised algorithms use various similarity or dissimilarity measures to group related data. Clustering techniques, which group similar data points together, are examples of unsupervised learning.
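The two settings can be contrasted with two small, commonly used techniques chosen here for illustration: a 1-nearest-neighbour classifier (supervised, because it relies on known labels) and k-means clustering (unsupervised, because it groups points without labels using distance as the similarity measure). The data points and the initial centroids are hypothetical.

```python
import math

# --- Supervised: 1-nearest-neighbour classification ---
# Hypothetical labelled training data: (features, known label).
TRAIN = [((1.0, 1.0), "small"), ((1.5, 2.0), "small"),
         ((8.0, 8.0), "large"), ((9.0, 7.5), "large")]

def predict(x, train):
    """Return the label of the closest training example."""
    _, label = min(train, key=lambda item: math.dist(x, item[0]))
    return label

# --- Unsupervised: k-means clustering (no labels) ---
def k_means(points, centroids, iterations=10):
    """Assign each point to its nearest centroid, then move each centroid to
    the mean of its cluster; repeat a fixed number of times."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        centroids = [
            tuple(sum(coord) / len(c) for coord in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)  # keep an empty cluster's old centroid
        ]
    return centroids, clusters

print(predict((2.0, 1.5), TRAIN))  # small
points = [(1.0, 1.0), (1.5, 2.0), (8.0, 8.0), (9.0, 7.5)]
centroids, clusters = k_means(points, centroids=[(0.0, 0.0), (10.0, 10.0)])
print(clusters)  # [[(1.0, 1.0), (1.5, 2.0)], [(8.0, 8.0), (9.0, 7.5)]]
```

The classifier needs the labels "small" and "large" to make a prediction, whereas k-means discovers the same two groups from the coordinates alone. `math.dist` requires Python 3.8 or later.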

5. Deep Learning

Deep Learning is a type of Machine Learning inspired by the structure of the human brain. Deep learning algorithms attempt to draw conclusions similar to those a human would reach by repeatedly analyzing data with a given logical structure. They use large artificial neural networks, whose units loosely mimic the behavior of human neurons.
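An artificial neuron computes a weighted sum of its inputs plus a bias and passes the result through an activation function. The sketch below wires three such neurons into a two-layer network that computes XOR, a function no single neuron of this kind can represent. The weights are set by hand for illustration; in real deep learning they are learned from data (typically by gradient descent), and activations are usually differentiable functions such as ReLU rather than the step function used here.

```python
def neuron(inputs, weights, bias):
    """Weighted sum of inputs plus bias, passed through a step activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0

def xor_network(x1, x2):
    """Two-layer network with hand-set (not learned) weights that computes XOR."""
    h_or = neuron([x1, x2], [1, 1], -0.5)         # fires if at least one input is 1
    h_and = neuron([x1, x2], [1, 1], -1.5)        # fires only if both inputs are 1
    return neuron([h_or, h_and], [1, -1], -0.5)   # "OR but not AND"

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```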

Two well-known deep learning architectures are the Convolutional Neural Network (CNN) and the Recurrent Neural Network (RNN). Convolutional Neural Networks use multiple layers of convolutional filters to extract features from the input. CNN models are particularly useful for image processing applications.
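The core operation of a CNN layer is sliding a small filter over the image and taking a weighted sum at each position. A minimal pure-Python sketch follows, assuming stride 1 and no padding. Like most deep learning libraries it does not flip the kernel, so it is strictly a cross-correlation. The image and the vertical-edge filter are hypothetical; a trained CNN learns its filter values from data.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation), stride 1, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Hypothetical 4x4 grayscale image: dark left half, bright right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-written vertical-edge filter.
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # [[0, 18, 0], [0, 18, 0], [0, 18, 0]]
```

The output responds strongly only in the middle column, where dark pixels meet bright ones, which is the kind of feature an early CNN layer extracts.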

A Recurrent Neural Network (RNN) applies the same network structure repeatedly, once per element of the input, carrying a hidden state from one step to the next. This makes it suitable for applications that process sequential input data; these models are particularly useful for text and audio processing applications.
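The recurrence can be sketched with a scalar RNN cell, h_t = tanh(w_x·x_t + w_h·h_{t-1} + b). The same weights are reused at every step, and the hidden state h carries information forward. Real RNNs use vectors and weight matrices; the scalar form and the weight values here are hypothetical simplifications.

```python
import math

def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    """Minimal scalar RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).
    The same weights are applied at every time step."""
    h = h0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Hypothetical weights; in practice they are learned,
# e.g. by backpropagation through time.
states = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print([round(h, 3) for h in states])
```

Although only the first input is non-zero, the later hidden states remain positive and decay gradually. This is the "memory" of earlier inputs that lets an RNN model dependencies in a sequence.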

While recurrent neural networks are good at capturing dependencies in the input sequence, they process the sequence one step at a time, which makes training slow and difficult to parallelize. More recently, the Transformer has emerged as a more efficient alternative for transforming an input sequence into an output sequence. It uses an encoder-decoder architecture and captures dependencies in the input sequence through token-to-token attention, in which each token can attend directly to every other token.
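Token-to-token attention in the Transformer is computed as scaled dot-product attention, softmax(QKᵀ/√d)·V. Every query is compared with every key at once, so no step-by-step recurrence is needed. The minimal sketch below uses plain lists and feeds the token vectors in directly as queries, keys, and values. That is a hypothetical simplification: real Transformers derive Q, K, and V from learned linear projections and add multiple heads and masking.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention:
    output_i = sum_j softmax_j(q_i . k_j / sqrt(d)) * v_j."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how strongly this token attends to each token
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
    return outputs

# Hypothetical 2-dimensional vectors for a 3-token sequence (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

Each output row is a weighted average of all value vectors, so every token's new representation can draw on the whole sequence in a single step.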

Deep learning techniques have shown remarkable success, largely because of the computational capability of GPUs and large-memory devices. More recently, large pre-trained deep learning networks have been developed that can be fine-tuned to suit various applications.

6. Planning

Planning is the task of finding a procedural course of action for a declaratively described system to reach its goals while optimizing overall performance measures. For each state, an automated planner determines which of the possible transformations for that state to apply. In contrast to classification, planners can provide guarantees on solution quality.
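A declaratively described system can be written in a STRIPS-style form, where each action lists its preconditions, the facts it adds, and the facts it deletes. A planner then searches for a sequence of applicable actions that makes the goal facts true. The sketch below uses forward breadth-first search, so the plan it returns is shortest in number of actions, one simple example of a quality guarantee. The robot-and-package domain and all action names are hypothetical.

```python
from collections import deque

# Hypothetical STRIPS-style domain: a robot carries a package from room A to room B.
# Each action: (name, preconditions, add list, delete list).
ACTIONS = [
    ("move(A,B)", {"robot_at_A"}, {"robot_at_B"}, {"robot_at_A"}),
    ("move(B,A)", {"robot_at_B"}, {"robot_at_A"}, {"robot_at_B"}),
    ("pick(A)", {"robot_at_A", "pkg_at_A", "hand_empty"},
     {"holding_pkg"}, {"pkg_at_A", "hand_empty"}),
    ("drop(B)", {"robot_at_B", "holding_pkg"},
     {"pkg_at_B", "hand_empty"}, {"holding_pkg"}),
]

def plan(initial, goal, actions):
    """Forward breadth-first search over states (frozensets of facts)."""
    start = frozenset(initial)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:  # every goal fact holds
            return steps
        for name, pre, add, delete in actions:
            if pre <= state:  # action is applicable in this state
                nxt = frozenset((state - delete) | add)
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [name]))
    return None

print(plan({"robot_at_A", "pkg_at_A", "hand_empty"}, {"pkg_at_B"}, ACTIONS))
# ['pick(A)', 'move(A,B)', 'drop(B)']
```

Real planners replace blind breadth-first search with heuristic search so they can scale to much larger state spaces.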

Planning techniques can be used to solve many real-world problems in areas such as robotics and autonomous systems, cognitive assistants, cyber security, and service composition.

7. Natural Language Processing

Natural Language Processing (NLP), or Computational Linguistics (CL), is an area of Artificial Intelligence (AI) that deals with giving computers the ability to process and understand natural language. The recent growth in online communication has led to NLP tools and techniques that help users communicate effectively, such as text suggestions in chat and email applications, automatic caption generation, chatbots, and search and recommendation systems. With these tools, NLP has become part of our day-to-day life. NLP processes text at different levels, e.g., syntactic, semantic, and discourse, in order to understand the meaning of the text as well as the intentions of the user.
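Text suggestion in chat applications can be illustrated, in a deliberately simplified way, with a bigram model that counts which word tends to follow which and suggests the most frequent followers. Modern suggestion systems use neural language models that also capture syntax and semantics. This sketch works only on word sequences, and its corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count, for each word, which words follow it (a bigram model)."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            following[prev][nxt] += 1
    return following

def suggest(following, word, k=2):
    """Return up to k of the most frequent words seen after `word`."""
    return [w for w, _ in following[word.lower()].most_common(k)]

# Hypothetical tiny chat corpus.
corpus = [
    "see you tomorrow",
    "see you soon",
    "see you tomorrow morning",
    "thank you so much",
]
model = train_bigrams(corpus)
print(suggest(model, "you"))  # ['tomorrow', 'soon']
```

"tomorrow" follows "you" twice, so it ranks first. "soon" and "so" tie with one occurrence each, and `Counter.most_common` keeps tied items in the order they were first seen.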

8. Vision

While NLP enables computers to process text data, computer vision is a field of Artificial Intelligence (AI) that enables computers to derive meaningful information from digital images, videos, and other visual input, and to take actions or make recommendations based on that information. Computer vision trains machines to process visual input using cameras, data, and algorithms. It is used in industries ranging from energy and utilities to manufacturing and automotive, and the market continues to grow; it was projected to reach USD 48.6 billion by 2022. Computer vision systems usually require large amounts of memory and processing power, but they can quickly surpass human capabilities: a system trained to inspect products or watch a production asset can analyze thousands of products or processes a minute, noticing imperceptible defects or issues.
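The product-inspection use case can be reduced to a very simple sketch: compare each product image pixel by pixel with a defect-free reference image and flag positions whose brightness differs by more than a threshold. This reference-differencing approach is an illustrative assumption, not a description of any particular system. Real inspection pipelines typically add image alignment, lighting normalization, and trained models such as CNNs. The images, the threshold, and `find_defects` are hypothetical.

```python
def find_defects(reference, sample, threshold=30):
    """Return (row, col) positions where the sample's brightness differs from
    the defect-free reference by more than `threshold`."""
    return [
        (r, c)
        for r, (ref_row, img_row) in enumerate(zip(reference, sample))
        for c, (ref_px, img_px) in enumerate(zip(ref_row, img_row))
        if abs(ref_px - img_px) > threshold
    ]

# Hypothetical 3x4 grayscale images (0 = black, 255 = white).
reference = [
    [200, 200, 200, 200],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
sample = [
    [198, 201, 200, 199],
    [200, 120, 200, 200],  # dark spot, e.g. a scratch
    [202, 200, 197, 200],
]
print(find_defects(reference, sample))  # [(1, 1)]
```

The threshold absorbs small sensor noise (differences of a few levels) while still catching the dark spot.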

9. Robotics

Developing human-like intelligent systems that can perform multiple tasks has long been a goal of technology. Robotics is a key branch of AI that deals with developing intelligent systems that can perform multiple tasks and make intelligent decisions. It combines computer science with electronics and mechanical engineering, where computer programs provide the intelligence necessary to perform these tasks.

10. Expert System

An expert system is a computer program that uses AI technologies to simulate the judgment and behavior of a human or an organization with expert knowledge and experience in a particular field.

Typically, an expert system incorporates a knowledge base containing accumulated experience and an inference or rules engine: a set of rules for applying the knowledge base to each particular situation described to the program. The system’s capabilities can be enhanced by adding to the knowledge base or to the set of rules.
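The separation between a knowledge base and an inference engine can be sketched with forward chaining. The knowledge base is a list of if-then rules, and the engine repeatedly fires any rule whose conditions are all known facts until nothing new can be derived. Extending the system's capabilities then only requires adding rules. The car-troubleshooting rules and fact names below are hypothetical and purely illustrative.

```python
# Hypothetical knowledge base: each rule is (set of required facts, fact to conclude).
RULES = [
    ({"engine_does_not_crank", "lights_dim"}, "battery_weak"),
    ({"battery_weak"}, "recommend_charge_battery"),
    ({"engine_cranks", "no_fuel_smell"}, "fuel_problem"),
    ({"fuel_problem"}, "recommend_check_fuel_pump"),
]

def infer(facts, rules):
    """Forward-chaining inference engine: fire every rule whose conditions are
    all known, add its conclusion, and repeat until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

derived = infer({"engine_does_not_crank", "lights_dim"}, RULES)
print(sorted(f for f in derived if f.startswith("recommend_")))
# ['recommend_charge_battery']
```

The recommendation is reached in two chained steps (symptoms lead to "battery_weak", which leads to the recommendation), even though no single rule links the symptoms to it directly.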

Modern systems may also include machine learning capabilities that improve their performance based on experience, just as humans do.

Expert systems have played a significant role in many industries, including financial services, telecommunications, healthcare, customer service, transportation, video games, manufacturing, aviation, and written communication. Two early expert systems broke new ground: Dendral, which helped chemists identify organic molecules, and MYCIN, which helped identify the bacteria causing severe infections such as bacteremia and meningitis, and recommended antibiotics and dosages.